Machines have long been known for their precision, speed, and ability to process complex data, but never for their empathy. However, with the rise of Emotional AI, that perception is changing fast. According to recent market analysis, the global Emotional AI market was valued at around USD 2.1 billion in 2024 and is projected to grow at a compound annual growth rate (CAGR) of about 22.9% through to 2033. Today, technology doesn’t just understand commands; it’s beginning to understand feelings.

Think about it. Have you ever received a recommendation from a virtual assistant that just “felt right”? Or noticed how some chatbots seem to respond differently depending on your tone? That’s Emotional AI, technology that senses, interprets, and reacts to human emotions.

In a digital world where personalisation defines success, Emotional AI is helping brands and businesses create experiences that feel more human and emotionally intelligent. Let’s explore how this incredible technology is reshaping human–machine relationships and why it’s becoming the future of interaction.

What is Emotional AI?

Unlike traditional AI, which analyses only logic and data, Emotional AI analyses the subtleties of human communication: facial expressions, tone of voice, eye movements, choice of words, and even physiological signals such as heart rate and skin temperature. Algorithms use these signals to infer whether an individual is happy, angry, stressed, or sad.

Think about an AI identifying frustration in your voice when you contact customer support about a common problem. Instead of replying with a standard script, the AI would respond in a more understanding manner and even notify a human agent when your frustration is too high for it to handle. That is what empathy looks like in practice, rather than a simulated display of emotion.
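The hand-off to a human described above can be sketched as a simple threshold rule. This is only an illustration: the `Turn` class, the `should_escalate` function, the 0.8 threshold, and the two-turn window are all assumptions made for the example, and the frustration scores are assumed to come from an upstream emotion model.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    text: str
    frustration: float  # assumed score in [0, 1] from an upstream emotion model


def should_escalate(turns: list[Turn], threshold: float = 0.8, window: int = 2) -> bool:
    """Escalate when the last `window` turns all meet or exceed the threshold."""
    if len(turns) < window:
        return False
    return all(t.frustration >= threshold for t in turns[-window:])


# Hypothetical conversation with made-up scores.
conversation = [
    Turn("Where is my order?", 0.4),
    Turn("I have asked twice already.", 0.85),
    Turn("This is unacceptable!", 0.95),
]
print(should_escalate(conversation))  # True
```

Requiring several consecutive high scores, rather than a single spike, is one way to avoid handing off a conversation because of one misread message.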

How is Emotional Intelligence Measured?

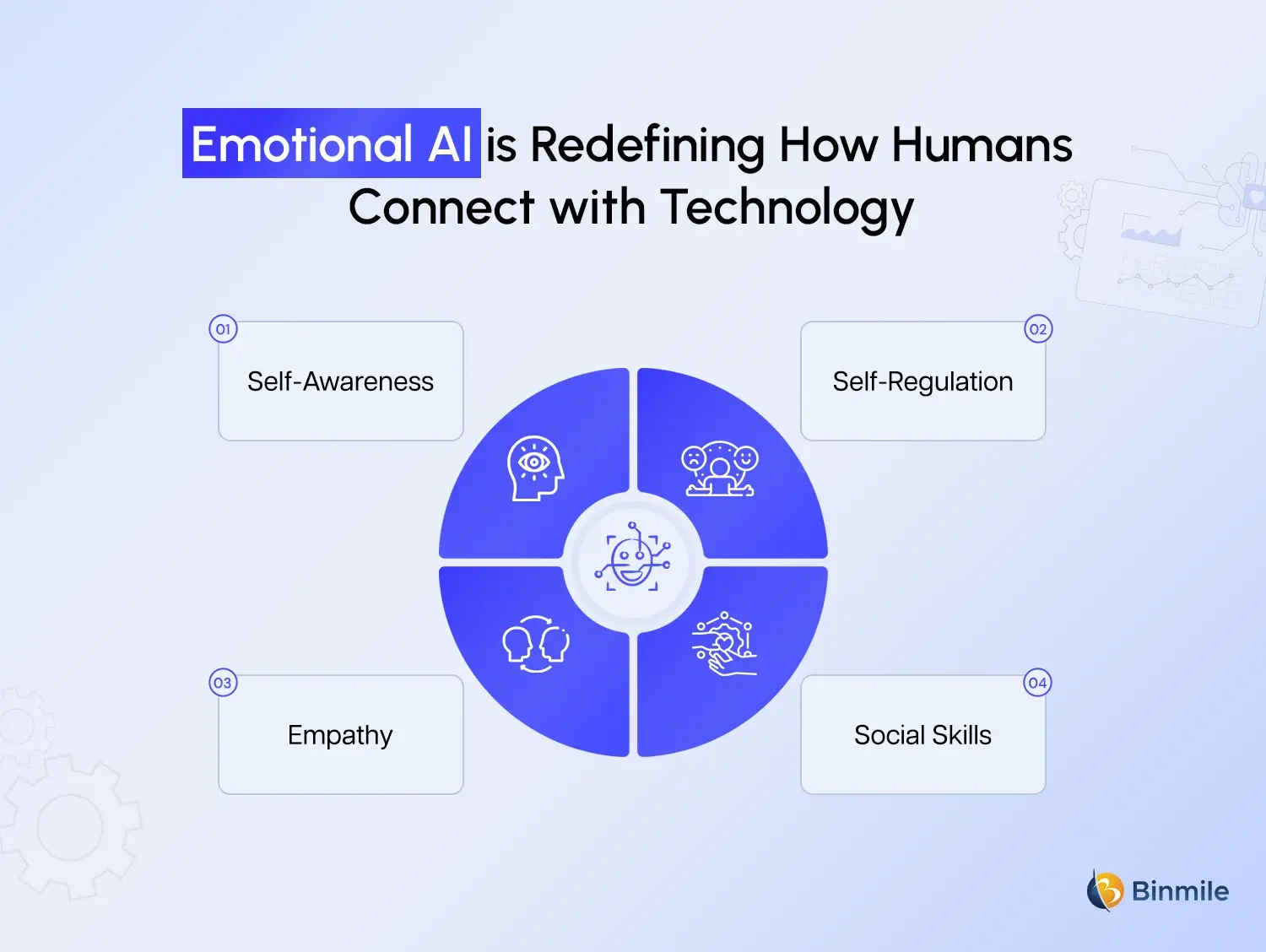

To understand Emotional AI better, we must first understand how emotional intelligence is measured in humans.

Human emotional intelligence (EI) refers to our ability to recognise, understand, and manage emotions, both our own and those of others. It plays a crucial role in communication, relationships, and decision-making. Psychologists Daniel Goleman and Peter Salovey popularised the concept, breaking it down into four key dimensions:

- Self-awareness: Knowing one’s own emotions and how they affect actions.

- Self-regulation: Managing impulses, staying calm under stress.

- Empathy: Recognising others’ emotions and responding appropriately.

- Social skills: Building healthy interpersonal relationships.

Emotional Intelligence (EI) in humans is assessed through self-reports, observational studies, and performance tests. Tools like the EQ-i (Emotional Quotient Inventory) and the MSCEIT (Mayer-Salovey-Caruso Emotional Intelligence Test) are highly regarded in the field.

How is this connected to machines?

Emotional AI uses technology to approximate these same functions. Facial recognition AI, for example, analyses micro-expressions to infer feelings. Voice analysis tools measure tone, pitch, and pacing. Sentiment analysis tools in NLP determine the emotional tone of text. In short, Emotion AI infers emotions from observable cues, much as people do.

This technology is what enables machines to understand our emotions and respond to us in more sophisticated ways.

The Science Behind Emotion AI

So, how does it all work behind the scenes? Emotion AI systems typically involve three main steps:

- Data Collection: Cameras, microphones, or sensors collect emotional data such as voice, facial expression, or body movement.

- Feature Extraction: The system identifies emotion-related markers like raised eyebrows, tense voice, or specific keywords.

- Emotion Classification: Machine learning models classify these markers into emotions such as happiness, anger, sadness, disgust, or surprise, and the system responds accordingly.
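As a rough illustration of these three steps for text input only, the sketch below uses a tiny hand-written keyword lexicon in place of a trained model. The lexicon, the function names `extract_features` and `classify_emotion`, and the "neutral" fallback are assumptions made for the example. Real systems would typically rely on trained models and richer signals such as audio or video.

```python
from collections import Counter

# Hypothetical keyword lexicon standing in for a trained model (assumption).
EMOTION_KEYWORDS = {
    "happiness": {"great", "love", "thanks", "happy"},
    "anger": {"angry", "furious", "unacceptable", "ridiculous"},
    "sadness": {"sad", "disappointed", "upset", "unhappy"},
}


def extract_features(text: str) -> Counter:
    """Feature extraction: count lexicon hits per emotion in the text."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    counts = Counter()
    for emotion, keywords in EMOTION_KEYWORDS.items():
        counts[emotion] = sum(1 for w in words if w in keywords)
    return counts


def classify_emotion(features: Counter) -> str:
    """Emotion classification: pick the emotion with the most hits."""
    emotion, hits = features.most_common(1)[0]
    return emotion if hits > 0 else "neutral"


# Data collection is simulated here with a hard-coded message.
message = "This is ridiculous, I am furious about the delay!"
print(classify_emotion(extract_features(message)))  # anger
```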

Let’s take an example.

When you talk to a virtual assistant in an irritated tone, the AI can recognise negative sentiment and switch to a calmer, more reassuring voice. Similarly, when a student looks confused during an online lesson, the system can repeat the concept with simpler language.

This continuous loop of sensing, analysing, and adapting enables AI systems to improve their empathy with each interaction.
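One way to picture the adapting step is a lookup from the detected emotion to a response style. The style table and the `choose_response_style` function below are hypothetical, and the emotion label is assumed to come from an upstream classifier.

```python
# Hypothetical mapping from a detected emotion to a response style (assumption).
RESPONSE_STYLES = {
    "anger": {"tone": "calm", "pace": "slow", "simplify": False},
    "confusion": {"tone": "patient", "pace": "slow", "simplify": True},
    "happiness": {"tone": "upbeat", "pace": "normal", "simplify": False},
}
DEFAULT_STYLE = {"tone": "neutral", "pace": "normal", "simplify": False}


def choose_response_style(emotion: str) -> dict:
    """Return a copy of the style for the emotion, or the default style."""
    return dict(RESPONSE_STYLES.get(emotion, DEFAULT_STYLE))


print(choose_response_style("anger")["tone"])          # calm
print(choose_response_style("confusion")["simplify"])  # True
```

A learning system would adjust such a table from feedback over time. A fixed table is used here only to keep the sketch short.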

Emotion Artificial Intelligence in Action

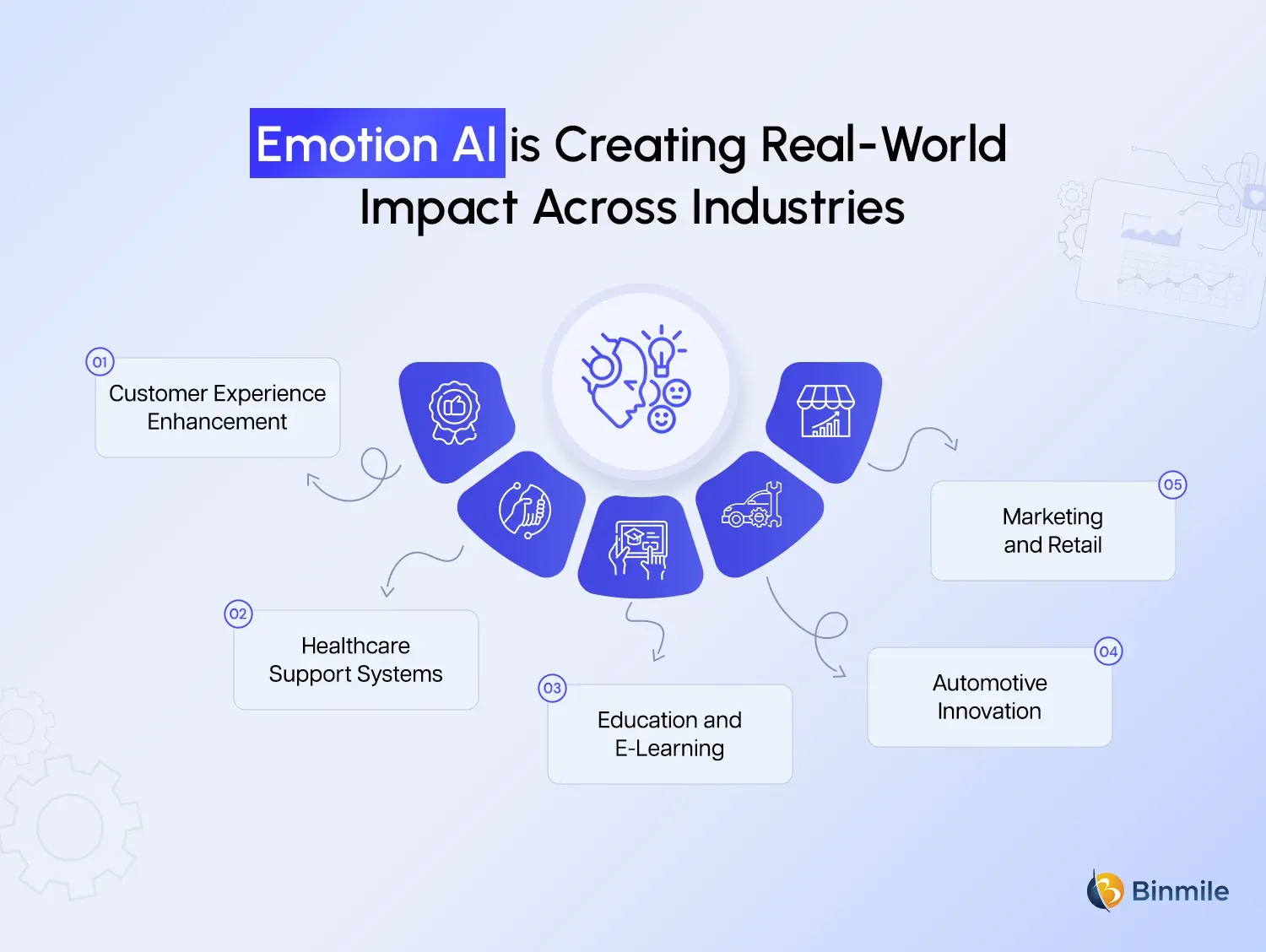

The practical applications of Emotion AI span nearly every industry, from healthcare to education to marketing. Let’s look at how it’s creating impact:

1. Customer Experience Enhancement

Emotional AI chatbots are programmed to identify annoyance and despondency, which helps improve the customer experience. These bots not only identify and adapt to changes in emotion, but can also escalate calls to human agents when sentiment turns to anger. Highly agitated customers are then handled swiftly by human support representatives, who can see the emotions the AI has flagged.

2. Healthcare Support Systems

Emotion AI analyses patients’ facial and vocal expressions to assess their psychological state. Early indicators of anxiety and depression can be detected and documented by Emotional AI during therapy sessions. These systems can support integrated, personalised mental health care.

3. Education and E-Learning

Virtual tutors powered by Emotional AI adapt and optimise lesson delivery in real time. They track changes in facial and vocal expressions to identify and respond when lesson content becomes cognitively overwhelming or when a learner is distracted.

4. Automotive Innovation

New automotive systems use Emotional AI to determine driver sentiment and mental state. In-cabin systems can be adjusted based on detected emotions to reduce frustration and counter low-arousal states such as drowsiness. More broadly, AI in the travel industry can enhance personal experience, efficiency, and customer service automation.

5. Marketing and Retail

Emotion AI technology determines and tracks sentiment about brand offerings such as advertisements and products. Automated systems can then adjust campaigns so that the advertisement or product resonates with its audience.

Looking to collaborate with one of the top emotional AI development companies? Let Binmile help you design emotion-driven digital experiences that truly connect with your audience.

Social Intelligence vs Emotional Intelligence

Although the terms are frequently used interchangeably, social intelligence and emotional intelligence are different concepts.

Social intelligence involves understanding and managing social relationships, knowing appropriate social behaviour, and navigating social structures. By contrast, emotional intelligence centres on understanding individual human feelings, both your own and those of others.

When integrated into AI, these two types of intelligence enable machines to understand emotions and the circumstances that surround them. For instance, an angry customer may exhibit frustration during a transaction, but social intelligence enables the system to determine whether the anger is about the product, the brand, or something else entirely.

Emotional intelligence in artificial intelligence integrates both types of intelligence, allowing technology to interpret both the emotional state of a person and the social situation they are in at the same time.

Emotion in AI: The Shift Toward Empathy

The incorporation of emotions in artificial intelligence (AI) is a transformative advancement on both a technological and cultural level. It reflects a change from detached, data-centric systems to emotionally intelligent interfaces capable of grasping the user’s feelings more profoundly.

Until very recently, communication between humans and machines was a one-way, transactional affair. You issue a command and the machine carries it out, no questions asked. With the advent of Emotional AI, that relationship is set to become two-way and interactive. Machines will not merely follow verbal commands, but also respond to the emotions behind them.

This technology offers considerable potential for customisation and personalisation. Emotion in AI is likely to become a central technology in trust-centric industries such as healthcare, education, finance, and retail. Emotionally intelligent systems could, in real time, soothe an exasperated customer, encourage a discouraged student, or support and guide a patient through difficult phases.

Adding emotional sensitivity to AI systems is a significant step towards closing the gap between artificial intelligence and artificial empathy.

Challenges in Implementing Emotion AI

For all its promise, Emotion AI still faces several challenges:

- Cultural Bias: Emotions are expressed differently depending on the region, so a system trained on one culture’s expressions may misread another’s.

- Ethical Considerations: Always-on emotion monitoring raises ethical questions regarding privacy, consent, and how the collected data is used.

- Complexity: Emotions are intricate and layered. A simple smile can mask true feelings, and sarcasm can reverse the meaning of positive-sounding words.

- Data Sufficiency: Emotion AI is driven by large volumes of data. This data must be ethically sourced, representative, and accurately labelled to produce fair results.

Continuous, responsible AI development is gradually lowering these barriers.

The Future of Emotion in AI

Over the coming decade, emotional intelligence in AI will expand beyond personal assistants and chatbots. Think about virtual doctors who can detect anxiety in patients during a teleconsultation. Consider HR software that evaluates employee morale during digital interactions. Picture smart classrooms that detect student stress during exams.

The objective of advancing technology is not to substitute human emotions. It is to strengthen the bonds of understanding between humans and machines. When coupled with ethical frameworks and transparent data, Emotion AI can change the meaning of digital empathy.

Transform your product with Emotion AI. Partner with Binmile today and make your technology more human, adaptive, and empathetic.

How Can Binmile Help Build Emotionally Intelligent Digital Solutions?

If your business is ready to move beyond logic-driven systems and embrace empathy in technology, Emotion in AI is your next step, and Binmile is your ideal partner. While Emotion AI may sound futuristic, it is already becoming a part of how modern businesses interact with users, and companies that combine intelligent data handling with smart automation are leading this change.

That is where Binmile comes in. As a trusted technology partner specialising in AI-powered applications, artificial intelligence as a service, and custom software development, Binmile helps organisations design systems that better understand user behaviour and context, the first step toward building emotionally aware experiences.

Through its focus on intelligent automation and data-driven personalisation, Binmile enables brands to:

- Build smarter conversational interfaces that adapt tone and response based on user sentiment.

- Integrate analytics that interpret behavioural data to improve engagement.

- Create digital solutions that feel more intuitive and user-centric.

Binmile supports the same principles driving Emotion AI, understanding users beyond basic interaction and creating experiences that respond to human intent. If you’re exploring how empathy and intelligence can coexist in your digital products, Binmile can help you get there, thoughtfully, and with technology that listens as much as it performs.

Frequently Asked Questions

AI affects empathy by helping machines understand and respond to human emotions better. For example, AI can detect if a person sounds sad or happy and reply in a caring way. This helps improve how people connect with technology, like chatbots or virtual assistants. However, AI does not feel emotions; it just learns patterns from data to act in an empathetic way.

Machines detect emotions using emotion recognition technology. They study signals like facial expressions, voice tone, body language, text and words used in chats and messages. By analysing these cues with AI models, machines can guess how a person feels and respond accordingly.

Machines do not have real feelings or emotional understanding. They respond kindly based on what they’ve learned from data, not from genuine care. So while AI can sound empathetic, it doesn’t truly feel empathy like humans do. So, we can say it is just simulated empathy.

The main technology is Natural Language Processing (NLP). NLP allows machines to read, understand, and respond to human language. It helps AI pick up emotions from words, tone, and context, making conversations more natural and empathetic.